This document is a user-friendly manual on how to use the Treefit package, which is the first toolkit for quantitative trajectory inference using single-cell gene expression data. In this tutorial, we demonstrate how to generate and analyze two kinds of toy data with the aim to help get familiar with the practical workflow of Treefit. After learning some basics, we will use Treefit to perform more biologically interesting analysis in the next tutorial (vignette("working-with-seurat")).

1. Generating toy data

While the Treefit package has been developed to help biologists who wish to perform trajectory inference from single-cell gene expression data, Treefit can also be viewed as a toolkit to generate and analyze a point cloud in d-dimensional Euclidean space (i.e., simulated gene expression data).

Treefit provides some useful functions to generate artificial datasets. For example, as we will now demonstrate, the function treefit::generate_2d_n_arms_star_data() creates data that approximately fit a star tree with the number of arms or branches; the term star means a tree that looks like the star symbol “*”; for example, the alphabet letters Y and X can be viewed as star trees that have three and four arms, respectively.

The rows and columns of the generated data correspond to data points (i.e., n single cells) and their features (i.e., expression values of d different genes), respectively. The Treefit package can be used to analyze both raw count data and normalized expression data, but regarding the production of toy data, it is meant to be used to generate continuous data like normalized expression values.

Importantly, we can generate data with a desired level of noise by changing the value of the fatness parameter of this function. For example, if you set the fatness parameter to 0.0 then you will get precisely tree-like data without noise. By contrast, setting the fatness to 1.0 gives very noisy data that are no longer tree-like. In this tutorial, we deal with two types of toy data whose fatness values are 0.1 and 0.8, respectively. We note that Treefit can be used to generate and analyze high dimensional datasets but we focus on generating 2-dimensional data to make things as simple as possible in this introductory tutorial.

1.1. Tree-like toy data

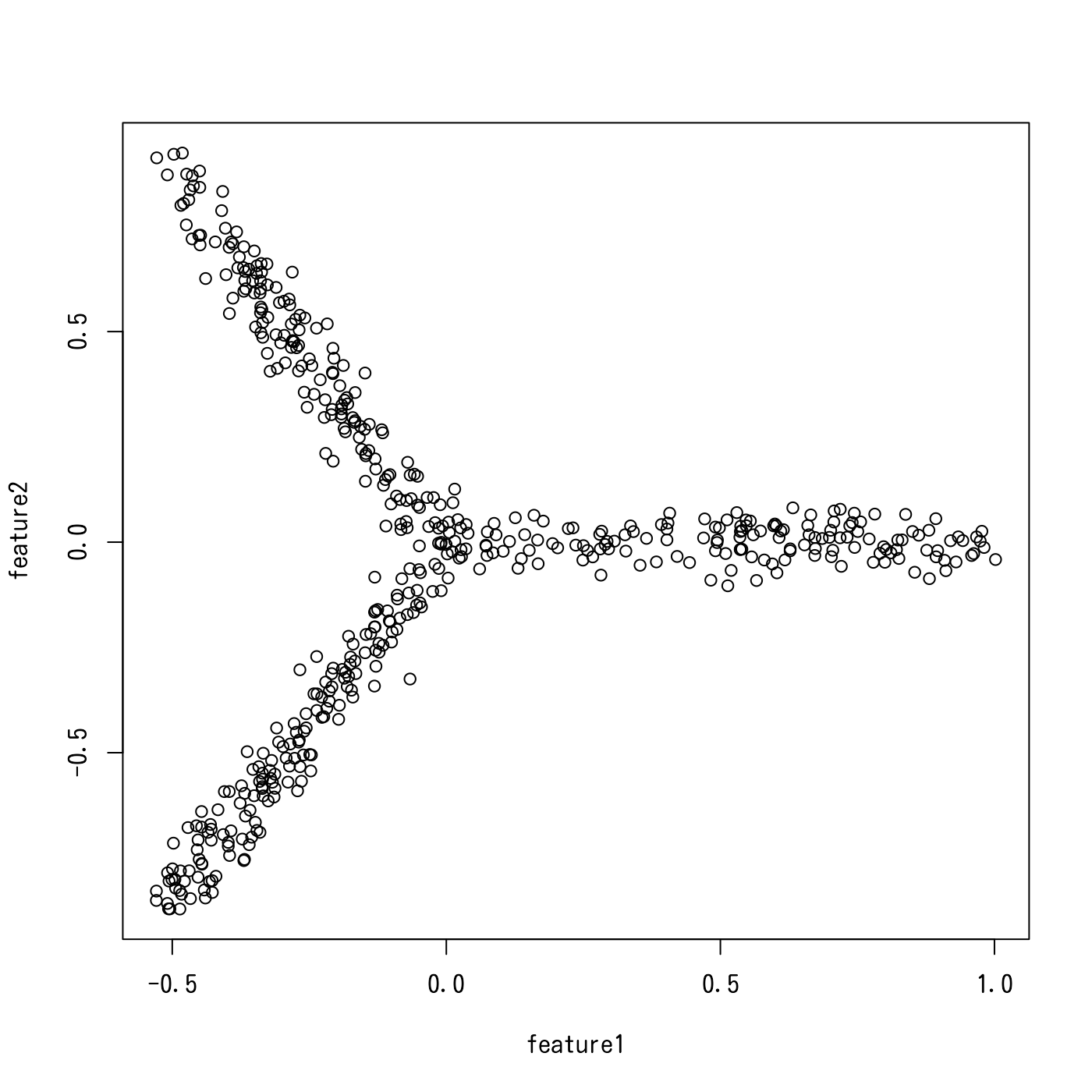

Let us first generate 2-dimensional tree-like data that contain 500 data points and reasonably fit a star tree with three arms. We can create such data and draw a scatter plot of them (Figure 1) simply by executing the following two lines of code:

star.tree_like <- treefit::generate_2d_n_arms_star_data(500, 3, 0.1)

plot(star.tree_like)

Figure 1. A scatter plot of tree-like toy data generated by Treefit

1.2. Less tree-like, noisy toy data

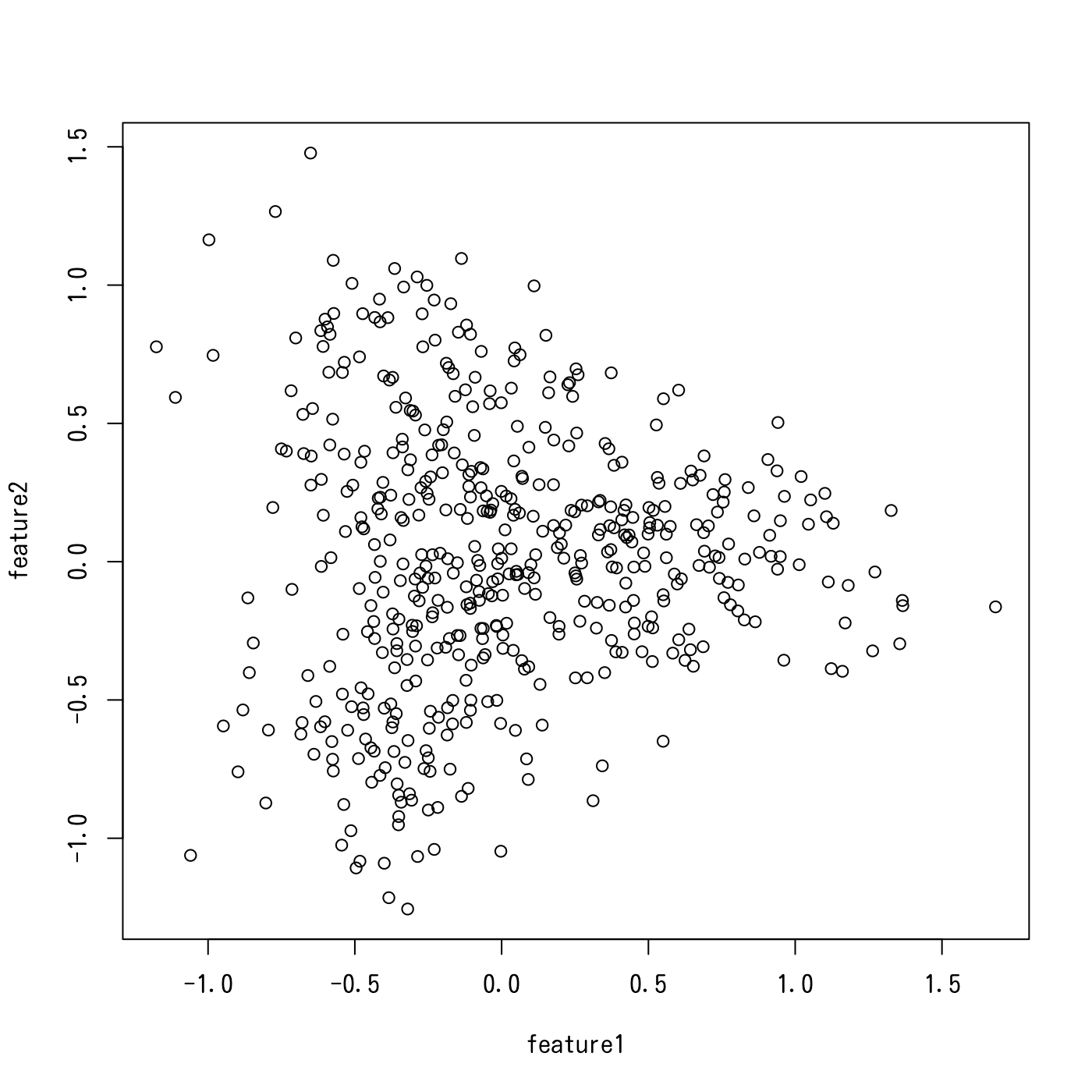

Similarly, we can generate noisy data that do not look so tree-like as the previous one. In this tutorial, we simply change the value of the fatness parameter from 0.1 to 0.8 in order to obtain non-tree-like data. The following two lines of code yields a scatter plot shown in Figure 2:

star.less_tree_like <- treefit::generate_2d_n_arms_star_data(500, 3, 0.8)

plot(star.less_tree_like)

Figure 2. A scatter plot of noisy toy data generated by Treefit

2. Analyzing the data

Having generated two datasets whose tree-likeness are different, we can analyze each of them. The Treefit package allows us to estimate how well the data can be explained by tree models and to predict how many “principal paths” there are in the best-fit tree. As shown in Figure 1, the points in the first data clearly form a shape like the letter “Y” and so the data are considered to fit a star tree with three arms very well, whereas Figure 2 indicates that the second data are no longer very tree-like because of the high level of added noise. Our goal in this tutorial is to reproduce these conclusions by using Treefit.

2.1. Tree-like toy data

Let us estimate the goodness-of-fit between tree models and the first toy data. This can be done by using treefit::treefit() as follows. The name parameter is optional but we should specify it, if possible. Because it’s useful to identify the estimation.

fit.tree_like <- treefit::treefit(list(expression=star.tree_like),

name="tree-like")

# Save the analytsis result to use other tutorials.

saveRDS(fit.tree_like, "fit.tree_like.rds")

fit.tree_like## $name

## [1] "tree-like"

##

## $max_cca_distance

## p mean standard_deviation

## 1 1 0.18860598 0.112087294

## 2 2 0.05734910 0.006932817

## 3 3 0.05028274 0.006170543

## 4 4 0.04734358 0.006528380

## 5 5 0.04570173 0.006737911

## 6 6 0.04366379 0.006193305

## 7 7 0.04003216 0.006509366

## 8 8 0.03889504 0.006438062

## 9 9 0.03777213 0.006137765

## 10 10 0.03642294 0.006309629

## 11 11 0.03568197 0.006243237

## 12 12 0.03442762 0.006171836

## 13 13 0.03354193 0.006166322

## 14 14 0.03247914 0.006430830

## 15 15 0.03156625 0.005902780

## 16 16 0.03089184 0.006015070

## 17 17 0.03042155 0.006119202

## 18 18 0.03001159 0.006023056

## 19 19 0.02884276 0.005819269

## 20 20 0.02790484 0.005380303

##

## $rms_cca_distance

## p mean standard_deviation

## 1 1 0.18860598 0.11208729

## 2 2 0.07706869 0.01352671

## 3 3 0.10971008 0.02013602

## 4 4 0.17194455 0.04471201

## 5 5 0.16888451 0.01674156

## 6 6 0.18068421 0.01660333

## 7 7 0.20414822 0.02973250

## 8 8 0.21943624 0.02177501

## 9 9 0.26109335 0.02527100

## 10 10 0.30830288 0.03879098

## 11 11 0.32295636 0.02381525

## 12 12 0.33768563 0.02718667

## 13 13 0.35599976 0.02567791

## 14 14 0.37155751 0.03141696

## 15 15 0.38831341 0.02749337

## 16 16 0.40683549 0.02607011

## 17 17 0.43104143 0.02428271

## 18 18 0.43955155 0.02075421

## 19 19 0.45054377 0.02611018

## 20 20 0.45909418 0.02687859

##

## $n_principal_paths_candidates

## [1] 3 6

##

## attr(,"class")

## [1] "treefit"treefit::treefit() returns a treefit object that summarizes the analysis of Treefit. We will explain how to interpret the results in the next section. For now, we may focus on learning how to use Treefit.

As we will see later, it is helpful to visualize the results using plot(). By executing plot(fit.tree_like), we can obtain the following two user-friendly visual plots, which makes it easier to interpret the results of the Treefit analysis.

plot(fit.tree_like)

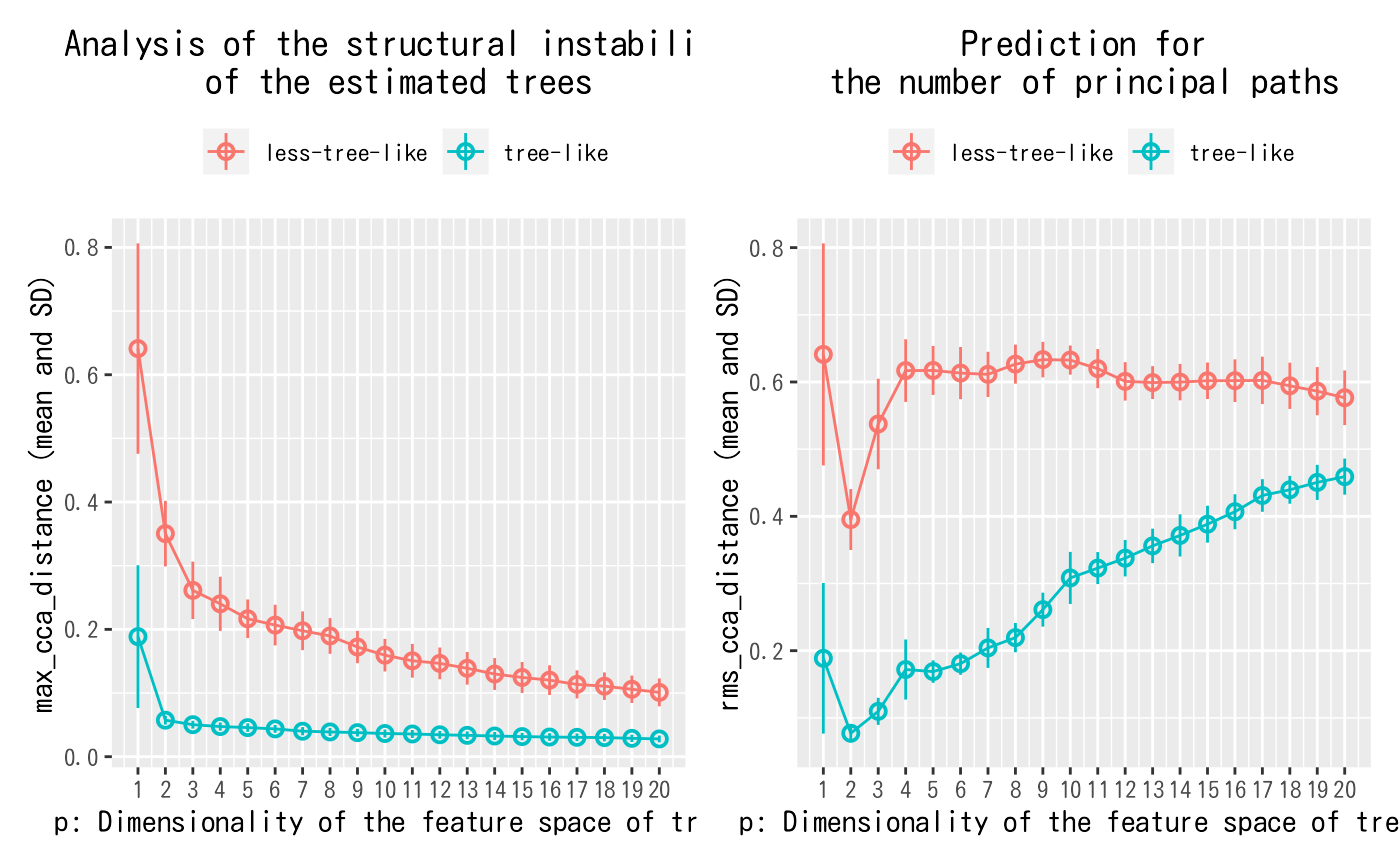

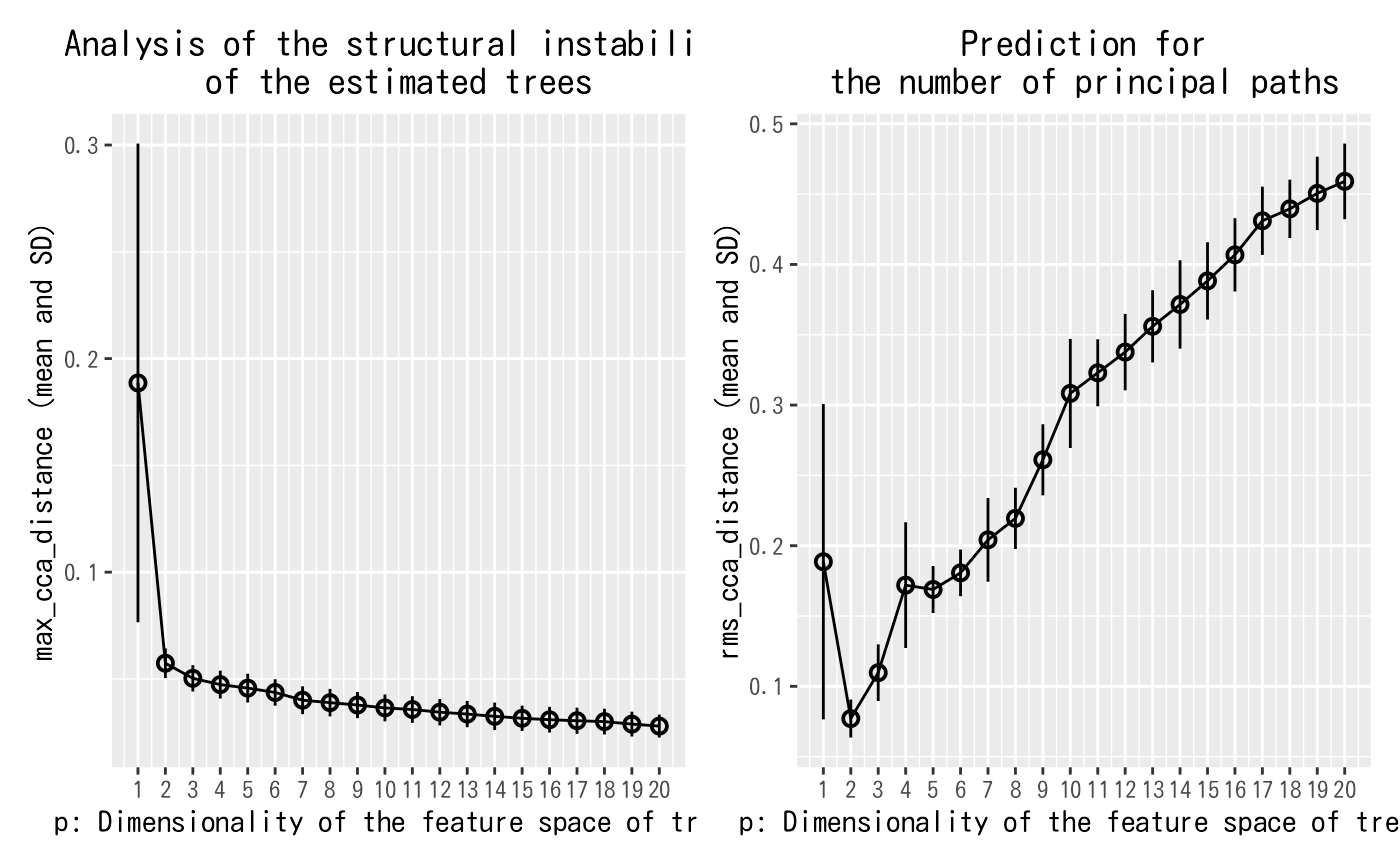

Figure 3. The output for the tree-like data shown in Figure 1

2.2. Less tree-like, noisy toy data

We can analyze the second toy data in the same manner.

fit.less_tree_like <- treefit::treefit(list(expression=star.less_tree_like),

name="less-tree-like")

# Save the analytsis result to use other tutorials.

saveRDS(fit.less_tree_like, "fit.less_tree_like.rds")

fit.less_tree_like## $name

## [1] "less-tree-like"

##

## $max_cca_distance

## p mean standard_deviation

## 1 1 0.6410779 0.16530427

## 2 2 0.3503794 0.05149123

## 3 3 0.2612743 0.04514520

## 4 4 0.2401434 0.04251065

## 5 5 0.2166526 0.03032552

## 6 6 0.2067030 0.03195008

## 7 7 0.1978107 0.03042195

## 8 8 0.1896353 0.02809781

## 9 9 0.1722665 0.02535623

## 10 10 0.1594339 0.02559466

## 11 11 0.1506703 0.02653699

## 12 12 0.1465941 0.02491429

## 13 13 0.1388525 0.02562315

## 14 14 0.1298897 0.02500551

## 15 15 0.1244570 0.02428170

## 16 16 0.1202338 0.02334609

## 17 17 0.1135588 0.02206775

## 18 18 0.1107619 0.02161223

## 19 19 0.1058824 0.02154103

## 20 20 0.1010044 0.02193400

##

## $rms_cca_distance

## p mean standard_deviation

## 1 1 0.6410779 0.16530427

## 2 2 0.3952325 0.04530719

## 3 3 0.5374504 0.06735476

## 4 4 0.6169260 0.04663143

## 5 5 0.6171774 0.03651230

## 6 6 0.6132443 0.03881056

## 7 7 0.6113552 0.03363042

## 8 8 0.6265815 0.02904500

## 9 9 0.6332530 0.02639997

## 10 10 0.6326131 0.02172755

## 11 11 0.6198775 0.02909744

## 12 12 0.6009167 0.02860706

## 13 13 0.5991288 0.02447413

## 14 14 0.5998079 0.02713869

## 15 15 0.6018131 0.02720529

## 16 16 0.6019081 0.03177225

## 17 17 0.6023813 0.03527602

## 18 18 0.5943385 0.03445902

## 19 19 0.5863388 0.03584081

## 20 20 0.5764867 0.04072415

##

## $n_principal_paths_candidates

## [1] 3 8 14

##

## attr(,"class")

## [1] "treefit"

plot(fit.less_tree_like)

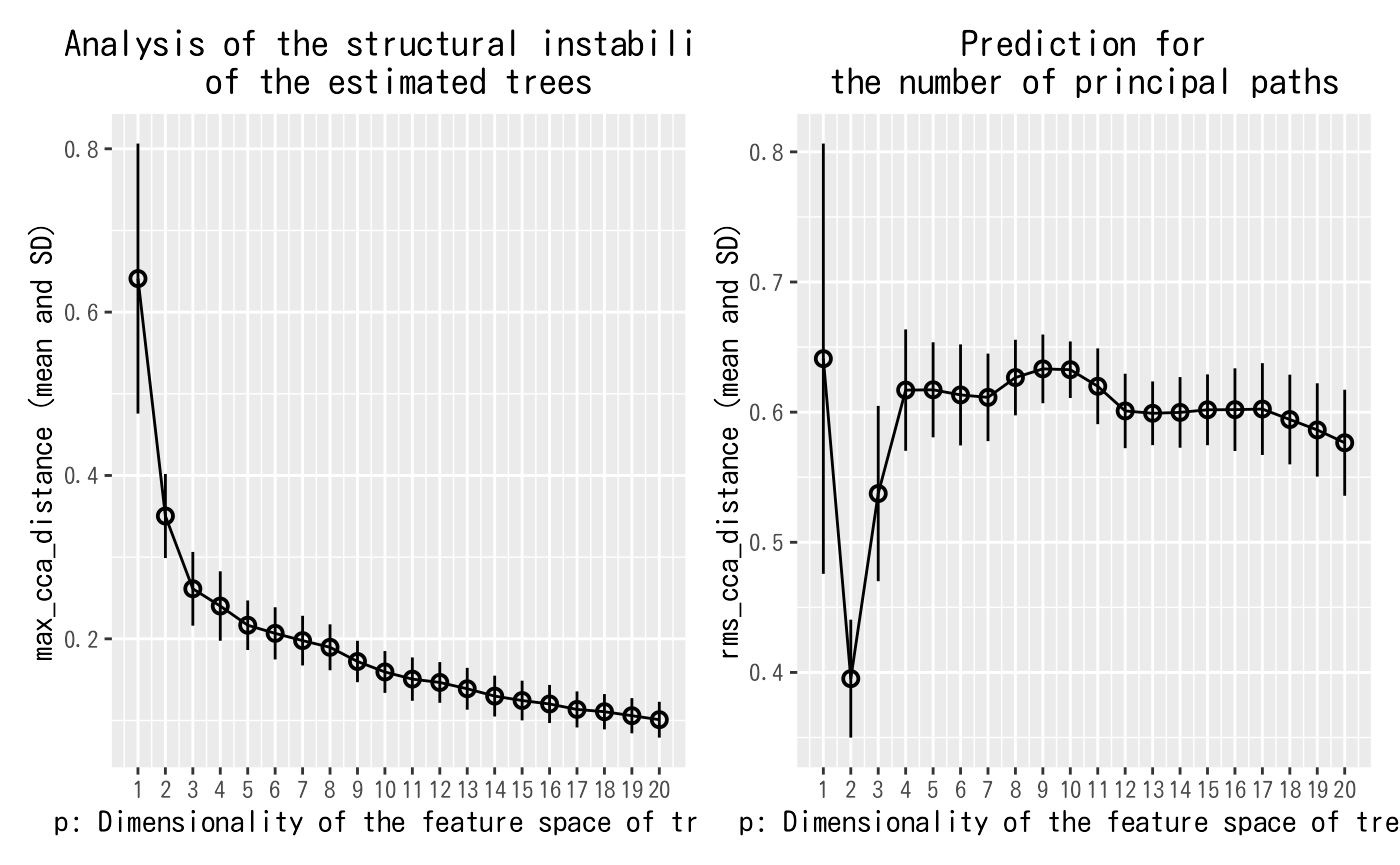

Figure 4. The output for the noisy data shown in Figure 2

3. Interpreting the results

Before interpreting the previous results, we briefly summarize the process of the Treefit analysis that consists of the following three steps.

First, Treefit repeatedly “perturbs” the input data (i.e., adds some small noise to the original row count data or normalized expression data) in order to produce many slightly different datasets that may have been acquired in the biological experiment.

Second, for each dataset, Treefit calculates a distance matrix that represents the dissimilarities between sample cells and then constructs a tree from each distance matrix. The current version of Treefit computes a minimum spanning tree (MST) that has been widely used for trajectory inference.

Finally, Treefit evaluates the goodness-of-fit between the data and tree models. The underlying idea of this method is that the structure of trees inferred from tree-like data tends to have high robustness to noise, compared to non-tree-like data. Therefore, Treefit measures the mutual similarity between estimated trees in order to check the stability of the tree structures. To this end, Treefit constructs a p-dimensional subspace that extracts the main features of each tree structure and then measuring mutual similarities between the subspaces by using a special type of metrics called the Grassmann distance. In principle, when the estimated trees are mutually similar in their structure, the mean and standard deviation (SD) of the Grassmann distance are small.

Although the word “Grassmann distance” may sound so unfamiliar to some readers, the concept appears in different disguises in various practical contexts. For example, the Grassmann distance has a close connection to canonical correlation analysis (CCA). Treefit provides two Grassmann distances $max_cca_distance and $rms_cca_distance that can be used for different purposes as we now explain.

3.1. Estimating the tree-likeness of data

The Treefit analysis using the first Grassmann distance $max_cca_distance (shown in the left panel of Figure 5) tells us the goodness-of-fit between data and tree models. In principle, as mentioned earlier, if the mean and SD of $max_cca_distance are small, then this means that the estimated trees are mutually similar in their structure. As can be observed, the distance changes according to the dimensionality p of the feature space, but $max_cca_distance has the property that the value decreases monotonically as p increases for any datasets.

Comparing the Treefit results for the two datasets, we see that the mean Grassmann distance for the first data does not fall below the second one regardless of the value of p and that the SD of the Grassmann distance for the first data is very small compared to the second data. These results imply that the estimated tree structures are very robust to noise in the first case but not in the second case. Thus, Treefit has verified that the first data are highly tree-like while the second data are not.

3.2. Predicting the number of principal paths in the underlying tree

The Treefit analysis using the other Grassmann distance $rms_cca_distance (shown in the right panel of Figure 5) is useful to infer the number of “principal paths” in the best-fit tree. From a biological perspective, this analysis can be used to discover a novel or unexpected cell type from single-cell gene expression (for details, see vignette("working-with-seurat")).

Unlike the previous Grassmann distance, the mean value of $rms_cca_distance can fluctuate depending on the value of p. Interestingly, we can predict the number of principal paths in the best-fit tree by exploring for which p the distance value reaches “the bottom of a valley” (i.e., attains a local minimum). More precisely, when $rms_cca_distance attains a local minimum at a certain p, the value p+1 indicates the number of principal paths in the best-fit tree. $n_principal_paths_candidates has these p+1 values. We don’t need to calculate them manually. When Treefit produces a plot having more than one valleys, the smallest p is usually most informative for the prediction. The smallest p+1 value can be obtained by $n_principal_paths_candidates[1].

Comparing the Treefit results for the two datasets, we first see that both plots attains a local minimum at p=2. This means that for both datasets the best-fit tree has p+1=3 principle paths, which is correct because both were generated from the same star tree with three arms. Another important point to be made is that the SD of the Grassmann distance for the first data is very small at p=2 compared to that for the second data; in other words, Treefit made this prediction more confidently for the first dataset than for the second one. This result is reasonable because the first dataset is much less noisy than the second one. Thus, Treefit has correctly determined the number of principal paths in the underlying tree together with the goodness-of-fit for each dataset.